PBS Home

Featured This Week

PBS Presents

PBS Nominated in 28th Annual Webby Awards

Now is your chance to help decide which nominees win, so vote for your faves (us!) in every category where PBS appears!

7 Women Inventors in STEM

These women inventors have changed the way we eat and cook today.

Learn How to Make a Pie with PBS

Find recipes and how-to videos for making your next best pie!

Continuing Coverage From PBS

PBS has a variety of content to keep you informed on current events.

Popular Videos

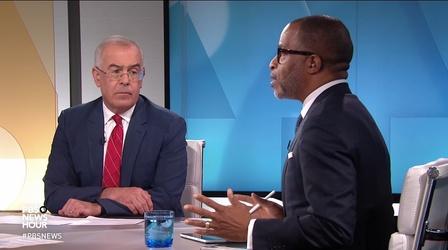

Brooks and Capehart on Democrats saving Speaker Johnson

Brooks and Capehart on if Democrats will save Johnson's speakership
